Last month, Google announced the renaming of TensorFlow Lite to LiteRT (Link to article). It now forms part of Google AI Edge, their revamped attempt to stay relevant in edge computing.

I had already made TensorFlow Lite (together with NumPy and OpenCV) available through an app for educational purposes in the PLCnext Store (Link to the app). However, the app restricts users to the prepackaged solution, even if they want to try something completely different or include other libraries.

Since I recently updated the app and needed to refresh my memory on how to do it, I decided to write this post and explain how to cross-compile the TensorFlow Lite runtime for the AXC F 2152.

The process may appear quite convoluted because the publicly available libraries do not offer direct support for the processor architecture of the AXC F 2152, so cross compilation is necessary.

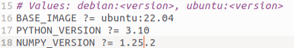

NOTE: This procedure was tested on:

- A virtualized Ubuntu 22.04 terminal.

- The virtual machine has 27 GB of RAM plus a 16 GB swap file [highly relevant for the cross-compilation process] and runs on 24 cores.

- A host machine with 32 GB of RAM and a Core i7-12850HX processor.

NOTE 2: This amount of RAM keeps the cross compilation stable and lets it run as fast as possible. With less RAM, the cross-compilation process may fail or take too long (up to several hours in my own experience).
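Before starting, it can help to check how much RAM and swap the build machine actually has. The following is a minimal sketch, assuming a Linux machine that exposes `/proc/meminfo`. The function names `total_mem_gib` and `has_enough_memory` are my own, and the 43 GiB default is simply the sum of the 27 GB of RAM and 16 GB of swap from my VM, not a documented requirement.

```shell
# Sum MemTotal and SwapTotal (both reported in kB) from a meminfo-style
# file and print the result in whole GiB. Defaults to /proc/meminfo.
total_mem_gib() {
    awk '/^(MemTotal|SwapTotal):/ { kb += $2 }
         END { printf "%d\n", kb / 1024 / 1024 }' "${1:-/proc/meminfo}"
}

# Succeed if RAM + swap reaches the given threshold in GiB.
# Assumption: 43 GiB is only what my own VM had (27 GB RAM + 16 GB swap).
has_enough_memory() {
    [ "$(total_mem_gib "${2:-/proc/meminfo}")" -ge "${1:-43}" ]
}
```

Calling `has_enough_memory || echo "Consider adding swap"` gives a quick warning. If the check fails, adding a swap file (for example with `fallocate`, `mkswap` and `swapon`) is one way to close the gap.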

- Download the source code of the TensorFlow version you want to compile: https://github.com/tensorflow/tensorflow/releases/

- Extract the content to a folder of your choice. I will use “myfolder” in the steps below.

- Open the Makefile at "/myfolder/tensorflow-2.XX.0/tensorflow/lite/tools/pip_package/Makefile" and modify the base image, Python version and NumPy version to match those of your system. E.g.:

- Open "download_toolchains.sh" at "/myfolder/tensorflow-2.XX.0/tensorflow/lite/tools/cmake/download_toolchains.sh" and modify the default flags for armhf as shown here:

- From within the TensorFlow folder (/myfolder/tensorflow-2.XX.0), run the command: "make -C tensorflow/lite/tools/pip_package docker-build TENSORFLOW_TARGET=armhf PYTHON_VERSION=3.10"

WARNING: The previous step may take a long time, so be patient.

- After the process is finished, copy the Python wheel from "tensorflow-2.XX.0/tensorflow/lite/tools/pip_package/gen/tflite_pip/python3.10/dist" to your controller and install it using pip.
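The steps above can be sketched as a small set of shell functions. This is only a sketch under a few assumptions: it uses the GitHub source-archive URL for a release tag, GNU `sed` as shipped with Ubuntu, and a working Docker installation for the `docker-build` target. The function names are hypothetical, and I assume the Makefile defines its defaults as `NAME ?= value` lines (I expect names such as `BASE_IMAGE`, `PYTHON_VERSION` and `NUMPY_VERSION`, but check your copy of the file). The changes to download_toolchains.sh are not automated here and still have to be made by hand.

```shell
# Build the GitHub source-archive URL for a release tag such as 2.16.2.
tf_source_url() {
    printf 'https://github.com/tensorflow/tensorflow/archive/refs/tags/v%s.tar.gz\n' "$1"
}

# Rewrite a "NAME ?= value" default in a Makefile in place (GNU sed).
# Usage: set_make_var FILE NAME VALUE
set_make_var() {
    sed -i -E "s#^($2[[:space:]]*\?=[[:space:]]*).*#\1$3#" "$1"
}

# Download, extract, adjust the Python default and start the build.
# Usage: build_tflite_wheel TF_VERSION WORKDIR PYTHON_VERSION
build_tflite_wheel() {
    tf_src="$2/tensorflow-$1"
    tf_pip_dir="tensorflow/lite/tools/pip_package"

    mkdir -p "$2" || return 1
    wget -O "$2/tensorflow.tar.gz" "$(tf_source_url "$1")" || return 1
    tar -xzf "$2/tensorflow.tar.gz" -C "$2" || return 1

    set_make_var "$tf_src/$tf_pip_dir/Makefile" PYTHON_VERSION "$3" || return 1

    # Edit tensorflow/lite/tools/cmake/download_toolchains.sh by hand
    # before this point; the required flag changes are not scripted here.
    (cd "$tf_src" && make -C "$tf_pip_dir" docker-build \
        TENSORFLOW_TARGET=armhf PYTHON_VERSION="$3") || return 1

    # List the generated wheel(s) so they can be copied to the controller.
    ls "$tf_src/$tf_pip_dir/gen/tflite_pip/python$3/dist"
}
```

For example, `build_tflite_wheel 2.16.2 "$HOME/myfolder" 3.10` would run the whole sequence for release 2.16.2 and Python 3.10. Both values are only illustrations. The base image and NumPy version can be adjusted the same way with further `set_make_var` calls before the build starts.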

NOTICE: In my experience, the process works for Python 3.9, 3.10 and 3.11 and for releases 2.14 up to 2.16.2. However, releases above version 2.17 have a bug. I have an open issue in TensorFlow's GitHub repository that can be followed here. I hope it will be solved in future releases.
